Artificial General Intelligence: What It Is and Why It's Our Next Great Leap

The AGI Goalpost Has Moved. And That’s the Best Thing That Could Have Happened.

There’s a strange new mood settling over Silicon Valley, a quiet recalibration happening in the labs where the future is supposed to be forged. For years, the North Star for every top researcher at OpenAI, Google, and Meta has been the same: the creation of Artificial General Intelligence, or AGI. It’s the grand prize, the holy grail—a machine with the cognitive abilities of a human, capable of learning, reasoning, and creating across any domain. We’ve been told it’s the key to unlocking the next stage of civilization.

But lately, the tone has shifted. You can hear it in the candid interviews, you can read it between the lines of research papers. The conversation is changing from a sprint toward a god-like superintelligence to something far more grounded, far more useful, and frankly, far more exciting.

Some are calling this a failure, a sign that we’ve hit a wall. They see the skepticism from AI luminaries like Yann LeCun and Gary Marcus, who rightly point out that simply making models bigger isn’t getting us to human-level intelligence, and they declare the dream dead.

I see the exact opposite. This isn't a retreat; it's a breakthrough in thinking. The goalpost for AGI hasn't just moved. It's been placed on a field where we can actually score. And that changes everything.

The God in the Machine Can Wait

Let’s talk about Amjad Masad, the CEO of Replit. He recently put a name to this incredible shift, drawing a line in the sand between the philosophical dream of "true AGI" and the engineering reality of "functional AGI," and arguing that the economy doesn't need true AGI. When I first read his comments, I honestly just sat back in my chair and smiled. This is the kind of clarity that reminds me why I got into this field in the first place.

Masad’s argument is beautifully simple. Do we really need an AI that can ponder the meaning of its own existence to revolutionize medicine, manufacturing, and science? Or do we need systems that can reliably and autonomously complete complex, verifiable tasks in the real world? He’s betting on the latter. Functional AGI doesn't need a soul; it just needs to work. It needs to be able to ingest a firehose of real-world data, learn from it, and then go out and do things—manage a supply chain, design a protein, write a complex piece of software.

Think of it like this: the quest for true AGI has been a bit like the ancient alchemists’ obsession with turning lead into gold. It's a profound, almost mystical goal that captures the imagination. But along the way, in their failed attempts to achieve the impossible, the alchemists developed laboratory techniques and observations that laid the groundwork for modern chemistry. Their work became something practical, powerful, and world-changing. Functional AGI is our modern chemistry. We’re abandoning the obsessive hunt for a philosophical panacea and getting our hands dirty building the tools that will define the next century.

Masad worries the industry might be caught in a "local maximum trap": we’re so focused on making small, profitable improvements to our current AI models that we’re missing the path to a real paradigm shift. But is it a trap, or is it an incredibly fertile valley? What if this "local maximum" is vast enough to contain solutions to climate change, disease, and resource scarcity? What if we spent the next decade building in this valley instead of staring up at an impossibly high peak we don't even know how to climb?

A More Human Timeline

This pragmatic turn isn't just coming from startup CEOs; it’s being echoed by the high priest of the AGI movement himself, OpenAI’s Sam Altman. In a recent interview, he predicted that AGI, when it comes, will "go whooshing by" and that society will prove far more adaptable than we fear. He expects "scary moments" and "bad stuff," as with any transformative technology, but he’s fundamentally optimistic about our ability to adjust.

That sentiment, that this will be a continuous process of integration rather than a single, world-ending singularity, is the most important signal we could possibly get. It means the people at the absolute bleeding edge are thinking less about flipping a switch to turn on a super-brain and more about weaving this intelligence into the fabric of our lives. And the pace is staggering: the gap between a lab experiment and a tool you can use is closing faster than most of us can track, so our societal immune system will have to learn to adapt in real time.

This recalibration is a direct response to a growing realization in the field. Scaling laws, the empirical pattern that bigger models trained on more data and compute keep getting predictably better, appear to be reaching a point of diminishing returns. We’re running out of high-quality training data. As Yann LeCun has argued, you can’t simply assume that more compute means smarter AI. This isn’t a dead end; it’s an invitation. It’s a challenge to the next generation of researchers to invent new architectures, to find more efficient ways to learn, and to move beyond brute force toward genuine elegance.

It’s the same leap our ancestors made when they moved from building bigger and bigger steam engines to inventing the electrical grid. It wasn't just about more power; it was about a fundamentally new kind of power: distributed, adaptable, and integrated. That’s the journey the AGI field is on right now. And with that power comes immense responsibility. Altman’s acknowledgment of "scary moments" isn't a bug; it's a feature of any truly profound technological shift. It's a call for us to build the ethical guardrails at the same time we’re building the engine, and to have the conversation about governance and safety before it’s too late.

The Real Revolution is Just Beginning

For years, the definition of AGI has been a moving target, a vague, sci-fi concept that felt more like a philosophical debate than an engineering goal. By shifting the focus to "functional AGI," we’re finally giving ourselves a concrete mission. We’re trading a mythical destination for a tangible direction.

This isn’t a downgrade of our ambition. It’s an upgrade in our wisdom. It’s the moment the field grows up, moving past the adolescent dream of creating a god in a box and into the adult responsibility of building tools that empower all of humanity. This is where the magic happens. Not in the sudden flash of a machine waking up, but in the steady, accelerating hum of a million practical problems being solved, a million human lives being improved. The race isn't over; it just got a whole lot more interesting.

Related Articles

Qualcomm's AI Future: What the Stock Surge Really Means for Our Tech Future

Beyond the Banner: Why the Humble Cookie Is the Blueprint for Our Digital Future We’ve all been ther...

Fubo's Stock is Surging: What's Actually Happening and Why It's Probably a Trap

I just wanted to know what time SmackDown was on. Simple, right? A straightforward question for our...

The HVAC Racket: Repair vs. Replacement and How to Pick a Company That Won't Screw You Over

So, let me get this straight. The Los Angeles Angels, a professional baseball franchise supposedly w...

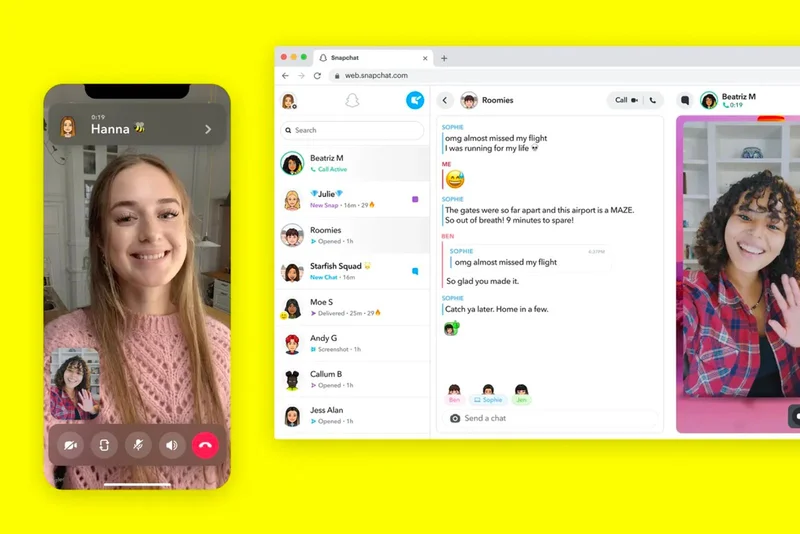

Snap Stock: Strong Forecast, Perplexity Deal... Seriously?

Snap's Perplexity Deal: Are We Officially Living in a Dystopia? Okay, so Snap's partnering with Perp...

The Future of Work: Navigating Remote Jobs, AI, and Finding Your Next Opportunity

Of course. Here is the feature article, written from the persona of Dr. Aris Thorne. * Sam Altman Is...

Google's Space Datacenters: What's the Point and Who Asked For This?

Google Wants to Put Data Centers in Space? Yeah, Right. Google, huh? Space data centers. Project Sun...